Since the inauguration of Arrhythmia & Electrophysiology Review in 2012, one of the most persistent concerns expressed by both authors and reviewers has been our impact factor and when we will get one. This is a legitimate question: publishing in a citable journal with a considerable impact factor justifies the efforts of the authors and secures credible publicity for their scientific work.

Journal impact factor (JIF) is a citation metric designed in 1955 by Eugene Garfield, the founder of the Institute for Scientific Information, to help librarians prioritise their purchases of the most important journals.1 It was inspired by Shepard’s Citations, an American citation index of legal resources that began in 1873 and allows lawyers to locate the publications citing a particular case and the legal decisions influenced by it. The idea of quantifying impact by counting citations led to the creation of the prestigious journal rankings, which have been recorded annually in the Science Citation Index since 1961. JIFs are calculated by Clarivate Analytics, published annually in Journal Citation Reports, and measure the average impact of articles published in a journal with a citation window of 1 year. The JIF for a given year is the total number of citations received in that year by items the journal published during the 2 preceding years, divided by the total number of ‘citable’ articles published by the journal during those 2 years. To obtain a JIF, a journal must be accepted by Clarivate Analytics’ citation databases, such as the Science Citation Index Expanded, and remain in the system for at least 3 years.2
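
As a worked illustration of the calculation described above, the JIF for a year y can be written as follows. The symbols C and N are notation introduced here for convenience, and the numbers in the example are hypothetical.

```latex
\[
\mathrm{JIF}_{y} \;=\; \frac{C_{y}(y-1) + C_{y}(y-2)}{N_{y-1} + N_{y-2}}
\]
% C_{y}(t): citations received in year y by items the journal published in year t
% N_{t}:    number of 'citable' articles the journal published in year t
%
% Hypothetical example: a journal published 40 citable articles in 2017 and
% 50 in 2018, and these items received 120 and 150 citations in 2019.
\[
\mathrm{JIF}_{2019} \;=\; \frac{150 + 120}{50 + 40} \;=\; \frac{270}{90} \;=\; 3.0
\]
```

Note that the numerator counts citations to any item in the journal, whereas the denominator counts only the items classed as citable.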

JIF has several shortcomings and limitations. Editorials and letters are non-citable items and are excluded from the JIF denominator, but these items, particularly in modern biomedicine, contain long lists of references, affecting the JIF calculations in many ways. In addition, JIF counts only citations to recent articles, because it considers only the first 2 years after a study is published. That is, citations that a journal's articles receive later do not affect its impact factor. Citations from journals not indexed in the Web of Science are not considered, regardless of their potential importance. Last, but not least, there has been a lack of transparency in the JIF calculations, partly due to the lack of open access to the citations tracked by the databases used by Thomson Reuters (the previous owner).3 All of these have damaged the reputation of the JIF as a reliable and reproducible scientometric tool.

There has been growing unease within the scientific community, among journal publishers and within funding agencies that the widespread use of JIFs to measure the quality of research is detrimental to science itself.3–5 The San Francisco Declaration on Research Assessment, initiated by the American Society for Cell Biology together with editors and publishers, calls for moving away from using JIFs to evaluate individual scientists or research groups and for developing more reliable ways to measure the quality and impact of research. One such method is the new Relative Citation Ratio that is now being used by the US National Institutes of Health.4

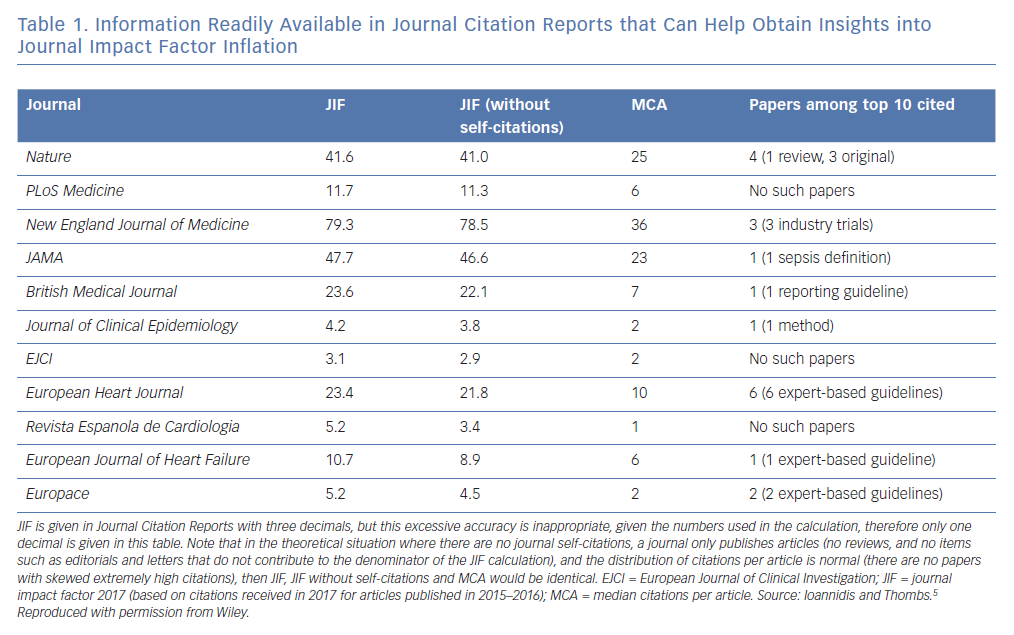

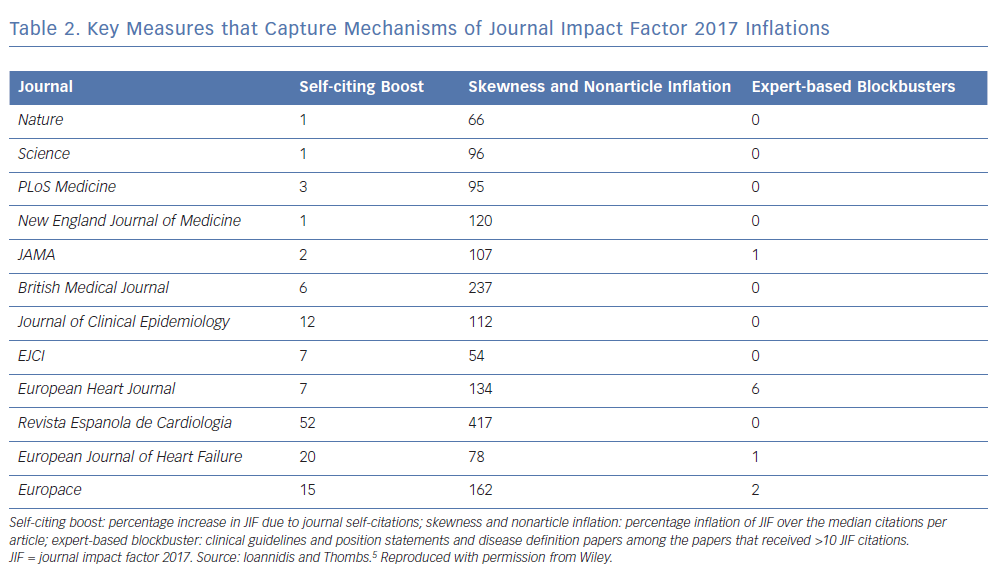

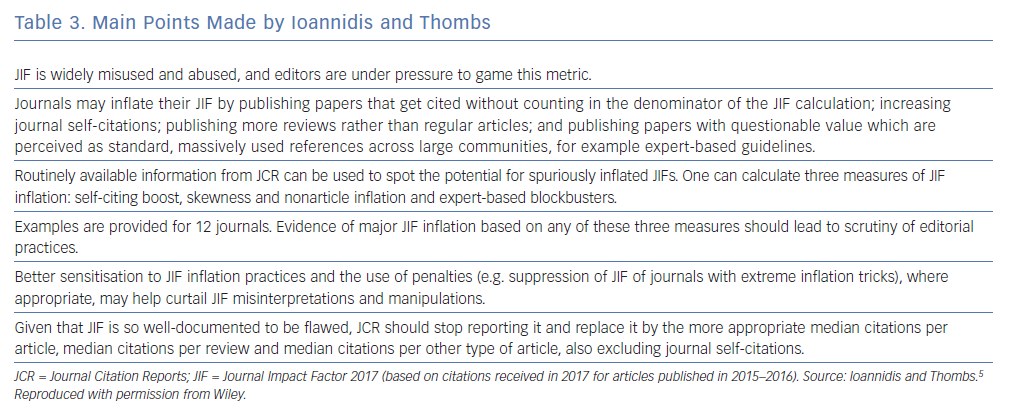

In a recent, devastating review, Ioannidis and Thombs pronounced JIF to be “without a doubt the most widely used, misused and abused bibliometric index in academic science”, adding that “JIF is a highly flawed, easily gameable metric.”5 They recommended its replacement with Median Citations per Item indicators calculated separately for articles, reviews and other types of papers (Tables 1–3).
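
To illustrate why a median-based indicator can behave differently from an average, the following minimal sketch compares the mean and the median number of citations per item, computed separately for each item type. The citation counts and the function names are hypothetical, chosen only for illustration. This is not the exact procedure proposed by Ioannidis and Thombs.

```python
from statistics import mean, median

# Hypothetical citation counts per item, grouped by item type (illustrative only).
citations_by_type = {
    "article": [0, 1, 1, 2, 2, 3, 3, 4, 5, 179],
    "review": [4, 6, 9, 12, 30],
}


def median_citations_per_item(counts_by_type):
    """Return the median citation count for each item type."""
    return {kind: median(counts) for kind, counts in counts_by_type.items()}


def mean_citations_per_item(counts_by_type):
    """Return the mean citation count for each item type."""
    return {kind: mean(counts) for kind, counts in counts_by_type.items()}


if __name__ == "__main__":
    print("median:", median_citations_per_item(citations_by_type))
    print("mean:  ", mean_citations_per_item(citations_by_type))
```

In this hypothetical data set a single highly cited article raises the mean for articles to 20, while the median stays at 2.5, which is much closer to what a typical article in the set received.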

However, one should keep in mind that all bibliometric numbers are only a proxy for research quality and measure just one part of it, namely impact or resonance. Despite its many limitations, JIF is still a credible marker and no serious scientific journal can thrive without it. Approximately 11,000 academic journals are currently listed in Journal Citation Reports and have a JIF.

A 2003 survey of physicians specialising in internal medicine in the US provided evidence that despite its shortcomings, impact factor may be a valid indicator of quality for general medical journals, as judged by both practitioners and researchers in internal medicine.6 Until it dies or is killed, therefore, JIF is here to stay.

Arrhythmia & Electrophysiology Review is taking its final steps towards this goal, and we hope for a respectable impact factor in 2020. That said, we know that our reputation is better served by the quality of the articles published and the high esteem in which senior and junior members of the electrophysiology community hold the journal.